TLDR

- The November 2025 outage originated from a major Cloudflare infrastructure failure affecting routing, DNS resolution, and API gateways.

- AI platforms like ChatGPT, Perplexity, and Claude went down quickly because every LLM prompt has to pass through the edge network in real time before it can reach the backend.

- The issue triggered widespread 500 errors and timeouts as Cloudflare’s network stopped forwarding requests to origin servers.

- Unlike regular websites that can rely on cached content, AI systems generate most responses on demand and have little cached output to fall back on, making them more fragile during network disruptions.

- The outage exposed how dependent global AI companies are on single-provider edge networks for traffic distribution.

- The incident highlighted the need for multi-CDN strategies, distributed model routing, and more resilient AI infrastructure architectures.

What This Article Covers and Why It Matters for AI-Driven Companies

The internet doesn’t break often. But when it does, the world suddenly sees how much of modern digital life depends on a small number of invisible infrastructure players. That’s exactly what happened on November 18, 2025, when Cloudflare - one of the world’s biggest edge and security networks - experienced a major disruption that rippled across the global web.

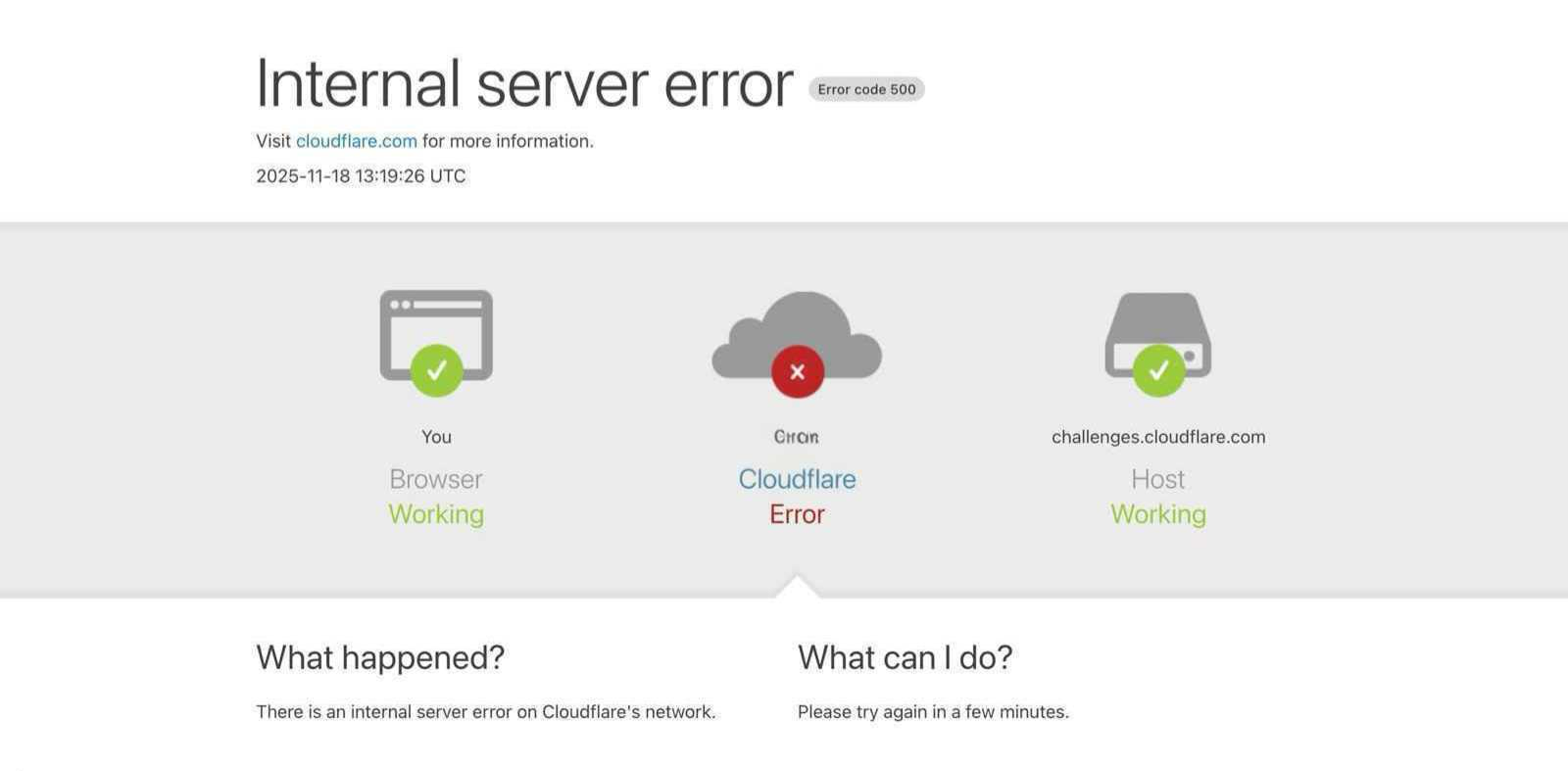

AI platforms were among the hardest hit. Users trying to access ChatGPT, Perplexity, and other LLM interfaces reported endless loading, 500 errors, or Cloudflare challenge failures. And while the models themselves appeared healthy, most requests never made it far enough to reach them.

For generative-AI companies, this outage was more than an inconvenience. It was a clear reminder that great AI experiences aren’t powered by models alone. They rely on a dependable, resilient infrastructure stack. And if the first layer collapses, everything above it becomes unreachable.

This article breaks down the incident, why LLM-based platforms were disproportionately affected, what the timeline looked like, and - most importantly - what resilience lessons AI companies must learn.

All information is based on verified public reports from AP News, Reuters, The Guardian, and other reputable sources.

What Went Wrong: A Clear Breakdown of the Incident

Cloudflare publicly confirmed that the disruption stemmed from “a spike in unusual traffic” starting around 11:20 AM UTC, which created widespread routing instability and triggered internal server errors across customer-facing sites.

This was reported by multiple outlets including The Guardian, which highlighted Cloudflare’s explanation of anomalous traffic levels causing the disruption.

To understand the technical nature of the issue:

- Routing congestion began at the edge layer, where Cloudflare processes inbound traffic before forwarding it to origin servers.

- 500 Internal Server Errors cascaded globally, affecting websites, APIs, and interactive applications.

- Some Cloudflare services recovered faster than others - AP News reported that Cloudflare’s Access product and its WARP VPN saw early restoration, while other components continued to show elevated error rates.

- Regional behavior varied. The Guardian noted that Cloudflare disabled its WARP service in London temporarily to stabilize traffic.

This wasn’t a single-application problem. It was a systemic edge-level breakdown - and that matters a lot when your product relies on low-latency API calls, real-time streaming, or AI inference.

Why AI and LLM Services Bore the Brunt

Although the outage hit many high-traffic services, AI platforms experienced visible and immediate failures. Users attempting to access ChatGPT shared screenshots of “Please unblock challenges.cloudflare.com” errors, as reported by Reddit users on r/ChatGPT.

The reason AI services suffered more than content or e-commerce platforms comes down to unique architectural characteristics.

Here are the core factors:

Heavy Dependence on Edge-Level Routing

AI applications require every user prompt to reach the backend API. Unlike static websites, very little of an AI response can be cached at the edge.

If the edge collapses, every request fails instantly.

No Cached Output

LLMs generate dynamic answers. They cannot rely on CDN caching the way a blog page or an image file can.

This means AI services have almost zero fallback capacity during a CDN or edge outage.

Authentication and Abuse Checks Happen at the Edge

Many AI platforms rely on their edge provider, in this case Cloudflare, for:

- Token verification

- Rate limiting

- Bot detection

- Safety checks

When these services fail, requests are dropped before reaching the model.

Single Point of Failure

Many AI teams rely exclusively on a single edge provider.

This creates an infrastructure bottleneck: if the ingress fails, the entire AI system becomes unreachable - even when model servers remain completely healthy.

Lack of Edge Observability

Typical AI observability stacks monitor inference latency, token throughput, GPU load, and origin API health.

Edge failures often do not appear in these dashboards, creating a confusing situation where everything internally looks healthy while the user experience is broken.
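One way to close this gap is to probe the same health endpoint twice: once through the public, edge-fronted hostname and once directly against the origin. The sketch below only shows the comparison logic. The `Diagnosis` names and the "any status below 500 counts as healthy" rule are illustrative assumptions, not something taken from a specific monitoring product.

```python
from enum import Enum
from typing import Optional


class Diagnosis(Enum):
    HEALTHY = "healthy"
    EDGE_FAILURE = "edge_failure"      # edge path broken, origin reachable directly
    ORIGIN_FAILURE = "origin_failure"  # origin unhealthy (the edge may be affected too)


def probe_ok(status: Optional[int]) -> bool:
    """A probe succeeds if it returned an HTTP status below 500.

    None means the probe got no response at all (timeout, DNS failure).
    """
    return status is not None and status < 500


def diagnose(edge_status: Optional[int], origin_status: Optional[int]) -> Diagnosis:
    """Compare a probe sent through the edge with one sent straight to the origin."""
    if probe_ok(edge_status):
        return Diagnosis.HEALTHY
    if probe_ok(origin_status):
        # Users are failing while the backend answers directly: blame the edge.
        return Diagnosis.EDGE_FAILURE
    return Diagnosis.ORIGIN_FAILURE
```

A result of `EDGE_FAILURE` is exactly the situation described above: internal dashboards look green while users see errors.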

Together, these factors explain why AI apps were among the first and most visible victims of the Cloudflare outage.

Timeline of the November 18, 2025 Outage

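Based on consolidated reports from The Guardian, AP News, Reuters, and Cloudflare communications, the sequence of events was broadly as follows:

- Around 11:20 AM UTC, Cloudflare observed a spike in unusual traffic, and 500 errors began appearing across customer-facing sites.

- Users of ChatGPT, Perplexity, and other services reported endless loading and Cloudflare challenge failures.

- Cloudflare temporarily disabled its WARP service in London to stabilize traffic.

- Cloudflare Access and WARP were among the first services restored, while other components continued to show elevated error rates.

This sequence highlights how rapidly the disruption unfolded and how long the tail of the outage lasted.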

The Real Impact: What This Means for Modern AI Businesses

The disruption caused ripple effects across industries. For generative-AI companies - especially those with customer-facing products - the implications are significant.

Here are the most important lessons.

User Trust Risks

When AI tools stop responding, people get frustrated instantly because AI interactions are conversational and expectation-driven.

Inconsistency damages brand reliability and retention.

SLA Breach Risks

Enterprise clients expect uptime guarantees.

If your AI tool goes down because your edge provider failed, you still risk SLA penalties unless your contract specifically excludes third-party outages.
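To make the SLA risk concrete, it helps to turn an uptime percentage into a downtime budget. The sketch below assumes a 30-day billing period and a simple "total minutes of downtime" definition. Real contracts define measurement windows and exclusions differently, so treat both function names and the defaults as illustrative.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted in the period for a given uptime commitment."""
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - sla_percent) / 100.0


def breaches_sla(outage_minutes: float, sla_percent: float, days: int = 30) -> bool:
    """True if the accumulated outage time exceeds the downtime budget."""
    return outage_minutes > allowed_downtime_minutes(sla_percent, days)
```

Under these assumptions, a 99.9% monthly commitment leaves a budget of roughly 43 minutes, and a 99.99% commitment leaves a little over 4 minutes. A single edge incident lasting longer than that would use up the whole budget on its own.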

Operational Stress

Outages force engineering and product teams into immediate escalation mode - investigating logs, validating internal systems, and responding to customer queries - all while the root cause lies outside their control.

Competitive Differentiation

Resilience becomes a selling point.

AI providers who architect for failure earn trust faster than those relying on a single provider.

Strategic Infrastructure Risks

A single point of failure at the edge can undermine millions of dollars of investment in LLM infrastructure, GPUs, R&D, and customer onboarding.

In short: AI companies cannot treat edge providers as invisible. They must treat them as critical dependencies requiring redundancy.

Key Strategies to Build a More Resilient AI Architecture

Consultadd recommends a structured, multi-layer resilience strategy. Each point below includes both the summary and a deeper explanation for practitioners.

Multi-CDN and Multi-Edge Architecture

- Distribute ingress across multiple providers (Cloudflare, Akamai, Fastly).

- Use health-based routing to automatically fail over when one provider experiences issues.

This reduces the chance that a single provider’s failure blocks user prompts.
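As a rough illustration of health-based routing, the sketch below marks a provider unhealthy after a number of consecutive failed health probes and then picks the highest-priority provider that is still healthy. The `EdgeProvider` class, the threshold of three failures, and the provider names in the usage note are hypothetical. In production this decision usually lives in a DNS or traffic-management layer rather than in application code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EdgeProvider:
    name: str
    priority: int                  # lower number = preferred
    failure_threshold: int = 3     # consecutive failed probes before failing over
    consecutive_failures: int = 0

    def record_probe(self, ok: bool) -> None:
        """Reset the failure counter on success, increment it on failure."""
        self.consecutive_failures = 0 if ok else self.consecutive_failures + 1

    @property
    def healthy(self) -> bool:
        return self.consecutive_failures < self.failure_threshold


def pick_provider(providers: List[EdgeProvider]) -> Optional[EdgeProvider]:
    """Return the preferred healthy provider, or None if all are unhealthy."""
    healthy = [p for p in providers if p.healthy]
    return min(healthy, key=lambda p: p.priority) if healthy else None
```

With two providers, say `EdgeProvider("primary", 0)` and `EdgeProvider("secondary", 1)`, three failed probes in a row against the primary shift traffic to the secondary, and one successful probe shifts it back. Requiring several consecutive failures is a deliberate choice to avoid flapping on a single dropped probe.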

Graceful Degradation Modes

- Cache common or repeated answers where possible.

- Provide a limited-interaction fallback mode instead of a broken UI.

Users should never see a dead interface.
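A minimal sketch of such a fallback path is shown below. It assumes the caller supplies a `call_model` function that raises `ConnectionError` or `TimeoutError` when the live path is unavailable, and a plain dictionary as the cache. Both are stand-ins for whatever client and cache a real product uses, and the degraded message is placeholder copy.

```python
from typing import Callable, Dict

DEGRADED_MESSAGE = "The assistant is temporarily unreachable. Please try again shortly."


def normalize(prompt: str) -> str:
    """Collapse case and whitespace so trivially different prompts share a cache key."""
    return " ".join(prompt.lower().split())


def answer_with_fallback(
    prompt: str,
    call_model: Callable[[str], str],
    cache: Dict[str, str],
) -> Dict[str, str]:
    """Try the live model first; fall back to a cached answer, then to a notice."""
    key = normalize(prompt)
    try:
        reply = call_model(prompt)
    except (ConnectionError, TimeoutError):
        if key in cache:
            return {"text": cache[key], "source": "cache"}
        return {"text": DEGRADED_MESSAGE, "source": "degraded"}
    cache[key] = reply  # remember successful answers for later outages
    return {"text": reply, "source": "live"}
```

Returning a `source` field lets the interface tell users when an answer is cached or when the service is degraded, which is more honest than silently showing stale content.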

Intelligent Retry Logic

- Implement exponential backoff with jitter in client SDKs.

- Use idempotency keys for safe retries without duplication.

Backoff with jitter keeps retrying clients from overloading a service that is only partially recovered, and idempotency keys let the server recognize and discard duplicate submissions.
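The two ideas can be combined in a few lines. The sketch below uses the "full jitter" variant of exponential backoff (a random delay between zero and a capped exponential ceiling) and generates one idempotency key per logical request, reusing it on every attempt. `TransientError`, the `send` callback signature, and the default base and cap values are assumptions made for illustration. How the key is transmitted and honored depends on the API being called.

```python
import random
import time
import uuid
from typing import Any, Callable


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 5xx)."""


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                  rng: Callable[[], float] = random.random) -> float:
    """Full jitter: a random delay in [0, min(cap, base * 2**attempt))."""
    return rng() * min(cap, base * (2 ** attempt))


def send_with_retries(send: Callable[[Any, str], Any], payload: Any,
                      max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> Any:
    """Call send(payload, idempotency_key), retrying on TransientError."""
    idempotency_key = str(uuid.uuid4())  # the same key is sent on every attempt
    for attempt in range(max_attempts):
        try:
            return send(payload, idempotency_key)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts; surface the error to the caller
            sleep(backoff_delay(attempt, rng=rng))
```

The `sleep` and `rng` parameters exist only so the behavior can be tested without waiting or relying on real randomness.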

Expanded Edge Observability

- Track real-time metrics for edge errors, DNS lookup failures, and regional latency.

- Maintain geographically distributed synthetic probes.

This gives teams early warning before users even notice issues.
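As one possible shape for this, the sketch below keeps a rolling window of synthetic probe results per region and reports which regions exceed an error-rate threshold. The class name, the window size of 20 probes, the 50% threshold, and the region labels in the usage note are illustrative assumptions. The probes themselves, meaning the HTTP or DNS checks run from each location, are outside the scope of the example.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class RegionalErrorRate:
    """Rolling error rate of synthetic probes, tracked separately per region."""

    def __init__(self, window: int = 20, alert_threshold: float = 0.5) -> None:
        self.alert_threshold = alert_threshold
        # Each region keeps only its most recent `window` probe outcomes.
        self._results: Dict[str, Deque[bool]] = defaultdict(
            lambda: deque(maxlen=window)
        )

    def record(self, region: str, ok: bool) -> None:
        self._results[region].append(ok)

    def error_rate(self, region: str) -> float:
        results = self._results.get(region)
        if not results:
            return 0.0  # no data yet for this region
        return results.count(False) / len(results)

    def alerting_regions(self) -> List[str]:
        """Regions whose recent error rate is at or above the threshold."""
        return sorted(
            region for region in self._results
            if self.error_rate(region) >= self.alert_threshold
        )
```

Feeding it results from probes in, for example, `"lhr"` and `"iad"` makes regional differences visible, which matters because edge incidents do not always affect every location equally.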

Status Pages on Independent Infrastructure

Your status domain must survive even if your primary edge provider is down.

Having a separate DNS path and CDN ensures communication continuity.
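A quick sanity check is to compare the nameservers of the primary domain and the status domain and confirm they do not belong to the same DNS provider. The sketch below takes nameserver hostnames that have already been looked up and compares their last two labels. That shortcut is a deliberate simplification: it misjudges multi-part suffixes such as `co.uk`, and the hostnames in the usage note are hypothetical.

```python
from typing import Iterable, Set


def provider_zones(nameservers: Iterable[str]) -> Set[str]:
    """Reduce NS hostnames to their last two labels as a rough provider identity."""
    zones = set()
    for host in nameservers:
        labels = host.rstrip(".").lower().split(".")
        zones.add(".".join(labels[-2:]))
    return zones


def shares_dns_provider(primary_ns: Iterable[str], status_ns: Iterable[str]) -> bool:
    """True if the two domains appear to be served by at least one common provider."""
    return bool(provider_zones(primary_ns) & provider_zones(status_ns))
```

For example, if the primary domain uses `ns1.edge-a.example` and the status domain uses `ns1.dns-b.example`, the check returns `False`, which is the desired outcome. A `True` result suggests the status page could go down together with the product.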

Routine Chaos Testing

Periodically simulate a CDN-level outage to test:

- Failover systems

- User experience

- Logging and alerting pathways

- Support team readiness

This is how platforms become battle-tested.
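A drill of this kind can start very small. The sketch below models each edge provider as an object that can be switched "down", routes a test prompt through the providers in order, and reports whether the request survived the simulated outage. `SimulatedEdge`, `route`, and `run_outage_drill` are hypothetical names. A real drill would inject faults at the DNS or network layer in a controlled environment rather than in process.

```python
from typing import Dict, List, Optional


class SimulatedEdge:
    """Stand-in for an edge provider that can be forced into an outage."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.down = False

    def handle(self, prompt: str) -> str:
        if self.down:
            raise ConnectionError(f"{self.name} is unreachable (simulated)")
        return self.name  # report which provider served the request


def route(prompt: str, edges: List[SimulatedEdge]) -> str:
    """Try each edge in order and return the name of the first one that responds."""
    errors = []
    for edge in edges:
        try:
            return edge.handle(prompt)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise ConnectionError("; ".join(errors))


def run_outage_drill(edges: List[SimulatedEdge], victim_index: int = 0) -> Dict[str, Optional[object]]:
    """Take one edge down, send a test prompt, then always restore the edge."""
    victim = edges[victim_index]
    victim.down = True
    try:
        try:
            served_by = route("drill prompt", edges)
            return {"survived": True, "served_by": served_by}
        except ConnectionError:
            return {"survived": False, "served_by": None}
    finally:
        victim.down = False  # never leave the fault injected after the drill
```

With a single provider the drill reports `survived: False`, which is the single point of failure made visible. Adding a second provider turns the same drill green.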

Post-Incident Analysis and Governance

Every disruption requires a documented review so architectural changes are implemented, not postponed.

How Consultadd Helps Companies Build AI Systems That Don’t Break Easily

Consultadd specializes in building next-generation generative-AI products - but equally important, we engineer the infrastructure foundation that makes them dependable.

Our expertise includes:

- Designing multi-CDN and multi-edge ingestion pipelines

- Creating fallback modes and cache-intelligent flows for LLM tools

- Architecting secure, fault-tolerant API front layers

- Implementing observability dashboards aligned with AI workloads

- Conducting resilience audits and chaos engineering drills

- Supporting AI product teams in end-to-end deployment

For companies that want AI systems that perform reliably at scale, resilience isn’t optional - it’s a competitive advantage.

Final Thoughts

The November 2025 Cloudflare outage exposed an uncomfortable truth: the AI revolution is only as strong as the infrastructure it stands on.

Models may be powerful, GPU clusters may be fast, and user bases may scale rapidly - but a failure in the first mile of traffic routing can render an entire AI platform inaccessible.

For generative-AI companies, resilience is now an essential part of the product strategy.

Consultadd helps organizations build AI ecosystems that remain reliable even when the internet’s foundations shake. If your team is planning to scale an AI product or strengthen your infrastructure resilience, we’re here to help.

Frequently Asked Questions

1. Was this outage caused by a cyberattack?

Cloudflare has not confirmed any malicious activity. The Guardian reported that the company attributed the incident to a sudden spike in unusual traffic patterns, not an attack.

2. Why were AI platforms hit harder than regular websites?

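Because LLM responses are generated per request, they cannot be served from cache, and the authentication and abuse checks that run at the edge cannot complete while the edge is failing.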

Every prompt requires full, live routing.

3. Were ChatGPT or Perplexity themselves down internally?

No. According to public data and user reports, the model infrastructure remained healthy.

Requests simply couldn’t reach the origin because the edge layer was failing.

4. Can AI companies fully prevent this sort of outage?

Not completely. But they can significantly reduce impact through multi-edge routing, redundancy, observability, and proper failover design.

5. What’s the cost of multi-CDN architecture?

Higher than relying on a single provider, but often lower than the cost of customer churn, SLA penalties, or brand damage during outages.

6. How soon should companies invest in resilience?

Immediately. The longer businesses wait, the more technical debt accumulates - and the higher the consequences when an outage strikes.